Vibe Coding in 2025: A Guide to AI-Augmented Development Workflows

Or: how to avoid disclosing your secret API key

The paradigm of software development is undergoing a fundamental shift. The integration of AI is no longer a novelty but a core component of the modern developer’s toolbox, moving beyond simple code completion to enabling true cognitive offload and architectural innovation.

This guide examines the 2025 ecosystem of platforms, models, and workflows that define state-of-the-art, AI-augmented development.

WARNING: This report was produced with the support of DeepSeek V3.2.

Defining “Vibe Coding”: The State of Augmented Flow

“Vibe coding” represents an optimized developer state characterized by minimal context switching, deep focus, and seamless human-AI collaboration. It is achieved when tooling friction approaches zero, allowing the developer to operate at the level of intent while AI handles implementation details, boilerplate, and systematic exploration.

Core Development Environments: The AI-Native IDE Landscape

1. Agent-First Integrated Development Environments (IDEs)

Cursor, a fork of VS Code, has established itself as one of the leading agentic IDEs. Its core innovation is Agent mode, which plans and performs multi-file edits using context from the whole workspace. Ctrl+K (Cmd+K on macOS) opens an inline prompt for targeted edits, while the chat panel handles conversational development, creating a cohesive workflow.

Zed prioritizes performance and collaboration. Its Rust-based, GPU-accelerated architecture is built for very low input latency, while its multiplayer features enable real-time collaborative editing. Its AI integration (which supports several model providers, including Anthropic’s Claude) is designed as a low-friction assistant panel, maintaining the editor’s minimalist philosophy while providing precise, context-aware assistance.

Windsurf (originally built by Codeium) is another VS Code-based, agent-centric editor. Its Cascade agent works across the whole repository and keeps track of your recent edits and terminal activity, so its suggestions can stay aligned with the feature branch and task at hand.

2. Cloud Development Environments (CDEs) & Full-Stack Platforms

GitHub Codespaces / Gitpod provide reproducible, ephemeral environments. By containerizing the entire development stack (OS, dependencies, toolchain), they eliminate “works on my machine” issues and enable rapid context switching between projects or branches. This is particularly valuable for onboarding, for standardized team environments, and for keeping development setups consistent with CI.

Replit operates as an integrated application platform. Beyond its browser-based IDE, it provides hosted databases, serverless deployment, and a package ecosystem, and its Replit Agent can scaffold and iterate on whole applications from natural-language prompts.

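Devin (by Cognition) is an autonomous AI software engineer that works inside its own sandbox, with access to a terminal, browser, and editor, to execute complex, multi-step engineering tasks from high-level human prompts. Cline, an open-source agent that runs as a VS Code extension, brings a similar plan-and-act loop into the developer’s own editor and asks for approval before editing files or running commands. Together they represent the frontier of agentic workflows.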

AI Model Ecosystem: Strategic Selection for Specific Tasks

The modern approach employs a portfolio of models, each selected for its comparative advantage in particular tasks.

Claude Sonnet / Opus (Anthropic): Excels at complex reasoning, system design, and nuanced instruction following. Its strength lies in understanding architectural intent and generating coherent, multi-file implementations. Best used for greenfield projects and major refactors.

OpenAI o1 Series: Engineered to reason step by step before answering. It performs strongly on logic-heavy tasks, algorithm implementation, and multi-step debugging, and its outputs tend to be terser and less conversational.

DeepSeek Coder V2 / R1: Leading open-weight models with strong benchmark performance. DeepSeek Coder V2 Lite (a 16B-parameter Mixture-of-Experts model) and the distilled R1 variants (for example 7B, 14B, and 32B) offer an excellent balance of capability and efficiency, making them well suited to local deployment via Ollama or LM Studio.

Local Models (Codestral, Qwen2.5 Coder, Code Llama): Avoid network round-trips, keep code fully private, and incur no API costs. They are well suited to inline completions and sensitive codebases. The trend in 2025 is toward smaller (7B-13B), highly capable models that run efficiently on consumer hardware.

Specialized Tools (Aider): CLI-based agents that operate directly on a Git repository. They accept natural-language change requests, plan and apply the edits, and commit each change with a descriptive message, so every AI-generated edit lands as a reviewable commit.
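The local-deployment path mentioned for DeepSeek and the other local models can be sketched against Ollama’s local HTTP API, which listens by default at `http://localhost:11434/api/generate`. This is a minimal sketch, assuming Ollama is running and that you have pulled a model; the tag `deepseek-coder-v2:16b` is an assumption, so substitute whatever model you actually use. Because the request never leaves your machine, there is no API key to leak.

```python
import json
import urllib.request

# Ollama's default local endpoint; change it if you run Ollama elsewhere.
OLLAMA_URL = "http://localhost:11434/api/generate"
# Assumed model tag; replace with any model you have pulled locally.
DEFAULT_MODEL = "deepseek-coder-v2:16b"


def build_request(prompt: str, model: str = DEFAULT_MODEL) -> urllib.request.Request:
    """Build a non-streaming generate request for a locally served model."""
    payload = {"model": model, "prompt": prompt, "stream": False}
    return urllib.request.Request(
        OLLAMA_URL,
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def complete(prompt: str, model: str = DEFAULT_MODEL, timeout: float = 120.0) -> str:
    """Send the prompt to the local server and return the generated text."""
    with urllib.request.urlopen(build_request(prompt, model), timeout=timeout) as resp:
        body = json.loads(resp.read().decode("utf-8"))
    # With "stream": False, Ollama returns one JSON object whose
    # "response" field holds the full completion.
    return body["response"]
```

For example, `complete("Write a Python function that reverses a string.")` returns the model’s answer as a single string once generation finishes; setting `"stream": True` instead would return incremental JSON lines, which suits editor integrations better.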

Google Gemini 3 Pro in AI Studio: The Strategic Prototyping Tool

Gemini 3 Pro within AI Studio serves as a dedicated multimodal reasoning environment, not a daily driver IDE.

Technical Advantages:

Native Multimodal Processing: Strong at interpreting visual inputs (screenshots, diagrams, wireframes) and generating corresponding code or documentation.

Massive Context Handling: Makes effective use of its 1M-token context window to analyze entire codebase snapshots.

Structured Output Generation: Particularly strong at generating well-formatted JSON, YAML, Protobuf schemas, and other configuration formats.
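Structured output is only useful if you check it before it reaches your tooling. A minimal sketch, assuming the model was asked to return a JSON object matching a hypothetical `service`/`port`/`replicas` schema: parse the reply, tolerate a surrounding Markdown code fence, and reject anything whose keys or types do not match.

```python
import json

FENCE = "`" * 3  # a Markdown code fence, built this way to keep the source readable

# Hypothetical schema for an AI-generated deployment config.
REQUIRED_FIELDS = {"service": str, "port": int, "replicas": int}


def strip_fence(reply: str) -> str:
    """Remove a surrounding Markdown code fence if the model added one."""
    text = reply.strip()
    if text.startswith(FENCE):
        # Drop the opening fence line (which may carry a language tag) ...
        text = text.split("\n", 1)[1] if "\n" in text else ""
        # ... and everything from the closing fence onward.
        text = text.rsplit(FENCE, 1)[0]
    return text.strip()


def parse_config(reply: str) -> dict:
    """Parse and validate a model reply; raise ValueError on any mismatch."""
    try:
        data = json.loads(strip_fence(reply))
    except json.JSONDecodeError as exc:
        raise ValueError(f"reply is not valid JSON: {exc}") from exc
    if not isinstance(data, dict):
        raise ValueError("expected a JSON object at the top level")
    for key, expected in REQUIRED_FIELDS.items():
        if key not in data:
            raise ValueError(f"missing field: {key}")
        value = data[key]
        # bool is a subclass of int in Python, so reject it explicitly.
        if isinstance(value, bool) or not isinstance(value, expected):
            raise ValueError(f"field {key!r} should be {expected.__name__}")
    return data
```

Failing loudly here is deliberate: a rejected reply can simply be sent back to the model with the error message, which is usually cheaper than debugging a malformed config downstream.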

Operational Role: It functions best as a companion application for architectural sessions, complex debugging with visual logs, or learning new frameworks. The workflow involves using Gemini for design and exploration, then transitioning the validated concepts to an agentic IDE (Cursor, Windsurf) for implementation and integration.
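Before pasting a codebase snapshot into a long-context session, it helps to control exactly what goes in, and this is where the API-key question from this guide’s subtitle bites: a careless dump can include `.env` files. A minimal sketch, assuming a local checkout; the skip lists and file suffixes are illustrative assumptions, and the 4-characters-per-token estimate is only a rough heuristic, not Gemini’s actual tokenizer.

```python
import os
from pathlib import Path

# Illustrative defaults; adjust them for your repository.
SKIP_DIRS = {".git", "node_modules", ".venv", "__pycache__"}
SECRET_FILES = {".env", ".env.local", ".npmrc", "credentials.json", "id_rsa"}
SOURCE_SUFFIXES = {".py", ".ts", ".go", ".rs", ".md", ".toml", ".yaml", ".yml", ".json"}
CONTEXT_BUDGET = 1_000_000  # assumed token budget, roughly a 1M-token window


def pack_snapshot(root: str) -> str:
    """Concatenate source files under root, skipping vendored dirs and likely secrets."""
    parts = []
    for dirpath, dirnames, filenames in os.walk(root):
        # Prune skipped directories in place so os.walk never descends into them.
        dirnames[:] = sorted(d for d in dirnames if d not in SKIP_DIRS)
        for name in sorted(filenames):
            path = Path(dirpath) / name
            if name in SECRET_FILES or path.suffix not in SOURCE_SUFFIXES:
                continue
            rel = path.relative_to(root).as_posix()
            text = path.read_text(encoding="utf-8", errors="replace")
            parts.append(f"=== {rel} ===\n{text}")
    return "\n\n".join(parts)


def estimate_tokens(text: str) -> int:
    """Very rough estimate (about 4 characters per token); real tokenizers differ."""
    return len(text) // 4


def fits_in_context(text: str, budget: int = CONTEXT_BUDGET) -> bool:
    """True if the rough token estimate stays within the budget."""
    return estimate_tokens(text) <= budget
```

An allow-list of suffixes is the safer default here: a new kind of secret file is excluded automatically, whereas a deny-list only catches the names you thought of in advance.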

The Legacy of OpenAI Codex: Historical Context

OpenAI Codex (2021) was the foundational model that demonstrated the viability of large-scale code generation. Fine-tuned from GPT-3, it powered the initial release of GitHub Copilot.

Why It’s Obsolete in 2025:

Architectural Limitations: A 12B-parameter decoder-only transformer, lacking modern advancements such as grouped-query attention, Mixture of Experts (MoE), or reasoning-focused training.

Context Constraints: 8K token limit versus today’s standard 128K-1M+.

API Deprecation: Officially deprecated by OpenAI in March 2023.

Capability Gap: The 12B Codex model solved 28.8% of HumanEval problems at pass@1 (37.7% for the fine-tuned Codex-S variant), whereas current frontier models report scores above 90%.
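The pass@1 figures above come from HumanEval, the benchmark introduced alongside Codex. The Codex paper (Chen et al., 2021, “Evaluating Large Language Models Trained on Code”) estimates pass@k by generating n samples per problem, counting the c samples that pass the unit tests, and computing 1 - C(n-c, k) / C(n, k). A minimal Python sketch of that estimator:

```python
from math import comb


def pass_at_k(n: int, c: int, k: int) -> float:
    """Unbiased pass@k for one problem: n samples drawn, c of them pass the tests."""
    if not 0 <= c <= n or not 1 <= k <= n:
        raise ValueError("require 0 <= c <= n and 1 <= k <= n")
    if n - c < k:
        # Every subset of k samples contains at least one passing sample.
        return 1.0
    # One minus the probability that a random k-subset has no passing sample.
    return 1.0 - comb(n - c, k) / comb(n, k)


def benchmark_pass_at_k(results: list[tuple[int, int]], k: int) -> float:
    """Average pass@k over a benchmark, given one (n, c) pair per problem."""
    return sum(pass_at_k(n, c, k) for n, c in results) / len(results)
```

For k = 1 this reduces to c / n per problem: with 10 samples of which 3 pass, pass@1 is 0.3. Sampling more than k completions and using the estimator gives a lower-variance number than literally drawing k samples once.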

Codex’s significance is historical: it validated the market and established the pattern of in-IDE AI assistance. All current tools are iterations on its core premise with orders-of-magnitude improvements.

Composing Your 2025 Development Stack

The most effective developers orchestrate specialized tools rather than relying on a single solution.

Example Technical Stack 1: The High-Velocity Solo Developer

Primary IDE: Cursor

Primary AI: Claude 3.5 Sonnet (via Cursor’s agent) for planning and complex tasks

Local Completions: Codestral (via Ollama) for sub-50ms inline suggestions

Cloud Sandbox: GitHub Codespaces for dependency-heavy testing

Specialized Tool: Aider for automated, Git-aware refactoring scripts
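Aider can be driven non-interactively, which is what makes “refactoring scripts” practical. A minimal sketch, assuming Aider is installed and on your PATH: its `--message` option sends a single request, applies the reply, and exits, and Aider commits its edits to Git by default. The instruction and file names below are hypothetical. Provider keys (for example `ANTHROPIC_API_KEY`) should come from environment variables, never from the script itself.

```python
import shlex
import shutil
import subprocess


def build_aider_command(instruction: str, files: list[str]) -> list[str]:
    """One-shot Aider invocation: apply `instruction` to `files`, then exit."""
    return ["aider", "--message", instruction, *files]


def run_refactor(instruction: str, files: list[str]) -> int:
    """Run Aider in the current Git repository and return its exit code."""
    if shutil.which("aider") is None:
        raise RuntimeError("aider is not installed or not on PATH")
    # API keys are inherited from the environment; nothing secret lives in this script.
    return subprocess.run(build_aider_command(instruction, files), check=False).returncode


if __name__ == "__main__":
    # Hypothetical refactor, printed as a shell command for review before running it.
    cmd = build_aider_command(
        "Rename fetch_data to load_records and update all call sites",
        ["app/data.py", "app/main.py"],
    )
    print(shlex.join(cmd))
```

Because each run ends in its own commit, a scripted refactor that goes wrong can be inspected with `git diff` and undone with an ordinary `git revert`.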

Example Technical Stack 2: The Cloud-Native Team

Primary Environment: Windsurf or Gitpod

Model: GPT-4o (native integration) for general tasks

Code Review: Cline agent for automated PR analysis and suggestion generation

Diagram-to-Code: Gemini 3 Pro in AI Studio for interpreting architecture diagrams

The Year AI Went From Chatbots to Coworkers

If 2024 was the year we marveled at AI’s potential, 2025 was the year it started doing actual work. The shift was palpable: reasoning models became standard features, agents began executing tasks autonomously, and the line between “AI assistant” and “AI employee” started to blur.

Looking back, 2024 feels almost quaint by comparison. What felt revolutionary then—ChatGPT’s conversational abilities, early multimodal models—now seems like table stakes. In 2025, we didn’t just get better models. We got models that think longer, act independently, and integrate into real workflows.

The Breakthroughs That Defined the Year

DeepSeek R1 kicked things off in January, proving that cutting-edge reasoning capabilities could be released as open weights (under the MIT license) and deployed at scale. Markets reacted sharply, with Nvidia’s share price falling roughly 17% in a single day, a sign that frontier AI development was no longer the preserve of a handful of labs.

The agent revolution arrived in waves. OpenAI’s Operator launched in January as a research preview, demonstrating a browser-using agent that could click, scroll, type, and complete web tasks with minimal human intervention. By July, this evolved into ChatGPT agent, which brought execution capabilities directly into the familiar chat interface. The promise was simple but profound: tell it what you want done, and it figures out how to do it.

Not to be outdone, Perplexity launched Comet, its AI browser, which didn’t just search the web: it browsed, explained, and automated tasks within the browsing flow itself. By October, what started as an invite-only novelty was rolling out to mainstream users.

Reasoning became a feature, not a benchmark. Anthropic introduced extended thinking with Claude 3.7 Sonnet, treating reasoning as a “thinking budget”: the model can be allowed to spend more tokens, and therefore more compute, on harder problems. Google pushed hard into thinking models with Gemini 2.5, and the entire industry followed suit.
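In Anthropic’s Messages API, that thinking budget is an explicit request parameter. A minimal sketch using only the standard library, assuming the `claude-3-7-sonnet-20250219` model ID and the documented constraints that `budget_tokens` be at least 1,024 and smaller than `max_tokens`; check the current API reference before relying on either. The key is read from the `ANTHROPIC_API_KEY` environment variable, which keeps it out of your source code and your Git history.

```python
import json
import os
import urllib.request

API_URL = "https://api.anthropic.com/v1/messages"
MODEL = "claude-3-7-sonnet-20250219"  # assumed model ID; use whichever model you target


def build_thinking_request(prompt: str, budget_tokens: int = 8_000, max_tokens: int = 16_000) -> dict:
    """Request body that enables extended thinking with an explicit token budget."""
    if budget_tokens < 1024:
        raise ValueError("budget_tokens must be at least 1024")
    if budget_tokens >= max_tokens:
        raise ValueError("budget_tokens must be smaller than max_tokens")
    return {
        "model": MODEL,
        "max_tokens": max_tokens,
        "thinking": {"type": "enabled", "budget_tokens": budget_tokens},
        "messages": [{"role": "user", "content": prompt}],
    }


def request_headers() -> dict:
    """Headers for the Messages API; the key comes from the environment, never the code."""
    key = os.environ.get("ANTHROPIC_API_KEY")
    if not key:
        raise RuntimeError("set ANTHROPIC_API_KEY in your environment")
    return {
        "x-api-key": key,
        "anthropic-version": "2023-06-01",
        "content-type": "application/json",
    }


def ask(prompt: str, budget_tokens: int = 8_000) -> dict:
    """Send one request and return the parsed JSON response."""
    body = json.dumps(build_thinking_request(prompt, budget_tokens)).encode("utf-8")
    req = urllib.request.Request(API_URL, data=body, headers=request_headers(), method="POST")
    with urllib.request.urlopen(req, timeout=300) as resp:
        return json.loads(resp.read().decode("utf-8"))
```

The response’s `content` list contains `thinking` blocks followed by the final `text` block; raising `budget_tokens` lets the model reason longer on harder problems, at the price of higher cost and latency.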

The gold-medal moments at the International Mathematical Olympiad, where both OpenAI and Google DeepMind reported gold-medal-standard performance, and at the International Olympiad in Informatics, where OpenAI reported the same, weren’t just impressive. They were cultural milestones showing AI could compete with humanity’s best young problem-solvers.

The Model Arms Race Intensified

Throughout the year, major releases came rapid-fire:

Claude 4 (Opus and Sonnet) positioned itself as the choice for serious work—coding, reasoning, and long-running tasks

GPT-5 marked a narrative shift from “wow factor” to ROI, cost-performance, and business value

Gemini 3 emerged as Google’s comprehensive platform play, combining thinking, multimodal capabilities, and agentic coding

Claude Opus 4.5 and Claude Sonnet 4.5 doubled down on computer use and agentic performance

GPT-5.2 and GPT-5.2-Codex specialized in professional work and long-horizon engineering tasks

Llama 4 kept open-weight competition credible at scale

The image and video generation space matured dramatically. Models like Nano Banana Pro rendered legible text reliably and produced images that were often virtually indistinguishable from photographs, while Sora’s text-to-video capabilities went viral.

The Infrastructure Emerged

Beyond flashy model releases, 2025 was about building the scaffolding for an agentic future:

Model Context Protocol (MCP), the open standard Anthropic introduced in late 2024, was adopted by OpenAI in March, a signal that interoperability was becoming real: models can connect to tools and data without a bespoke integration for every use case.

OpenAI’s AgentKit launched in October, providing tools to build, deploy, and optimize agents for production, not just demos.

METR’s Time Horizon framing gave the industry a clean way to discuss agent endurance—how long can an agent work before failing? Their research suggested a doubling time of roughly seven months, a metric that became increasingly relevant as agents moved from lab experiments to deployed systems.
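Under the hood, MCP messages are JSON-RPC 2.0 requests, and over the stdio transport each message is a single line of JSON. A minimal sketch of the messages a client sends to list and invoke a server’s tools, assuming a hypothetical `search_issues` tool. A real session must first complete MCP’s `initialize` handshake, which is omitted here, and in practice you would use an official MCP SDK rather than hand-rolling messages.

```python
import itertools
import json
from typing import Optional

_request_ids = itertools.count(1)  # JSON-RPC ids must be unique within a session


def jsonrpc_request(method: str, params: Optional[dict] = None) -> dict:
    """Build a JSON-RPC 2.0 request object."""
    message = {"jsonrpc": "2.0", "id": next(_request_ids), "method": method}
    if params is not None:
        message["params"] = params
    return message


def list_tools() -> dict:
    """Ask the server which tools it exposes."""
    return jsonrpc_request("tools/list")


def call_tool(name: str, arguments: dict) -> dict:
    """Invoke one tool by name with JSON arguments."""
    return jsonrpc_request("tools/call", {"name": name, "arguments": arguments})


def encode_for_stdio(message: dict) -> bytes:
    """Serialize as one newline-terminated line (json.dumps escapes embedded newlines)."""
    return (json.dumps(message, separators=(",", ":")) + "\n").encode("utf-8")
```

This uniform request shape is the interoperability point: any client that can emit these messages can use any compliant server’s tools, without a per-tool integration.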

What It All Means

As Demis Hassabis recently emphasized, AI represents a revolution comparable to the Industrial Revolution, but ten times bigger and ten times faster. That’s not hyperbole anymore—it’s an observation grounded in 2025’s relentless pace of advancement.

The meta-story of the year is clear: reasoning evolved from a niche benchmark into a product feature tied to agents, tools, browsers, and long-term task execution. We moved from “projects that use AI” to “AI that completes projects.”

Looking Ahead to 2026

If 2025 was the prelude, 2026 marks the opening act of AI’s economic integration. The technology is ready to enter businesses as genuine workforce augmentation, not just productivity tools.

The bottlenecks ahead aren’t about making smarter text generators. They’re about:

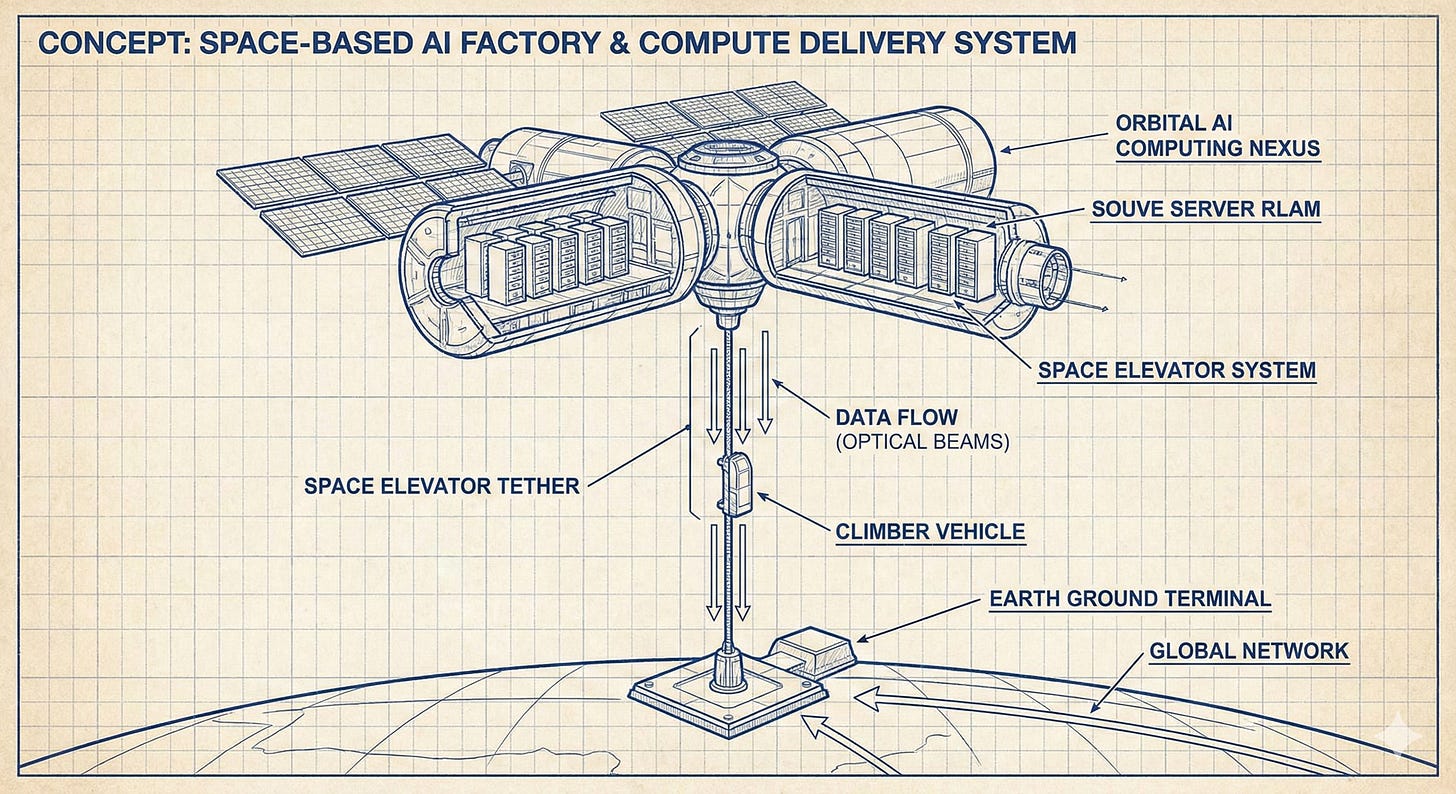

Energy and compute supply to support increasingly demanding workloads

Security challenges like prompt injection and adversarial attacks

Agent governance frameworks to ensure responsible deployment

Tool interoperability standards that actually work at scale

The endgame is visible now: we’re shifting from prompts to projects. From conversations to collaborations. From assistants to agents.

The revolution isn’t coming. It’s here. And 2025 was the year we stopped wondering if it would happen and started figuring out how to work alongside it.

Emerging Trends for Late 2025

Audio-Driven Development: Tools such as Audiolab enable voice conversations with AI about code, reducing typing as a bottleneck for conceptual work.

Persistent Codebase Agents: Long-running AI processes that maintain a living model of the codebase, proactively suggesting optimizations, dependency updates, and security patches.

Parameter-Efficient Fine-Tuning (PEFT) at Scale: The ability to quickly fine-tune an open base model (e.g., Qwen2.5 Coder) on a private codebase, creating a company- or project-specific expert without the cost of full retraining.

Integration with Observability Platforms: AI that can correlate production errors (from Datadog, Sentry) directly to source code and suggest fixes.
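Parameter-efficient fine-tuning is cheap because it trains only a small add-on to frozen weights. Taking LoRA (low-rank adaptation) as one common PEFT method: a frozen weight matrix W of shape d × k is adapted as W + BA, where B is d × r and A is r × k, so only r(d + k) parameters are trained instead of dk. A small Python sketch of that arithmetic; the 4096-wide layer and rank 16 are illustrative assumptions, not figures from any specific model.

```python
def full_params(d: int, k: int) -> int:
    """Trainable parameters when fine-tuning a d x k weight matrix directly."""
    return d * k


def lora_params(d: int, k: int, r: int) -> int:
    """Trainable parameters for a rank-r LoRA adapter: B is d x r, A is r x k."""
    return r * (d + k)


def trainable_fraction(d: int, k: int, r: int) -> float:
    """Share of the layer's parameters that LoRA actually trains."""
    return lora_params(d, k, r) / full_params(d, k)


if __name__ == "__main__":
    d = k = 4096  # illustrative hidden size
    r = 16        # illustrative adapter rank
    print(full_params(d, k))            # 16777216
    print(lora_params(d, k, r))         # 131072
    print(trainable_fraction(d, k, r))  # 0.0078125, i.e. under 1%
```

In practice adapters are attached to many layers (often the attention projections) and the totals add up across them, but the ratio stays small because r is much smaller than d and k.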

Conclusion: Strategic Toolchain Composition

The evolution from Codex (2021) to the current ecosystem represents a shift from AI as an autocomplete to AI as a collaborative partner. The 2025 development workflow is defined by:

Intent-Based Interfaces over command memorization

Specialized Model Selection over one-model-fits-all approaches

Deep Environment Integration over separate chat interfaces

Composable Toolchains over monolithic platforms

The optimal setup is not static but evolves with project requirements. The strategic advantage lies in understanding the strengths of each component—whether it’s Gemini’s multimodal reasoning, Claude’s architectural understanding, or a local model’s latency—and composing them into a seamless, augmented workflow.

The core competency for 2025 developers is no longer just writing code, but effectively orchestrating the AI tools that write code with them.

The speed of AI development shows no signs of slowing. Breakthroughs that once took years now arrive weekly. Investments in data centers are unprecedented. And somewhere in a lab right now, someone is building what will make 2025 look as quaint as 2024 does today.

Get ready for 2026 with The Plausible Futures Newsletter.
